Netflix processes videos through a three-stage microservices pipeline: Ingest (receive and validate source files), Processing (split into chunks, encode in parallel across hundreds of EC2 instances using VMAF quality metrics), and Distribution (deliver via Open Connect CDN). Each title gets a custom encoding ladder optimized for its content complexity.

Netflix streams to 280 million subscribers across 190 countries. Every movie and TV show you watch has been processed by their video pipeline before it reaches your screen.

This is not a simple upload. Each title gets encoded into dozens of different quality levels. A single movie might produce over 1,200 video files. All optimized for different devices, screen sizes, and network conditions.

The engineering behind this is impressive. Netflix rebuilt their entire video processing system from a monolithic application into microservices. They open-sourced VMAF, which is now the industry standard for measuring video quality. And they do it all at massive scale on AWS.

This post breaks down how their pipeline works and what software developers can learn from it.

The Scale of Netflix Video Processing

Before diving into architecture, let me put some numbers on what Netflix handles:

| Metric | Value |

|---|---|

| Subscribers | 280+ million |

| Countries | 190 |

| Titles in catalog | 17,000+ |

| New content hours per year | Thousands |

| Encodes per title | 100+ variants |

| Quality metric | VMAF (open-source) |

Every piece of content needs processing before it can stream. Original productions, licensed content, and localized versions all go through the same pipeline.

The challenge is not just encoding videos. It is doing it reliably, quickly, and at consistent quality across thousands of titles.

The Three Stage Pipeline

Netflix’s video processing follows three main stages. Each stage is a separate system that can scale independently.

flowchart TB

subgraph Stage1["fa:fa-download STAGE 1: INGEST"]

direction LR

I1[fa:fa-upload Source Files] --> I2[fa:fa-search Inspection] --> I3[fa:fa-check-circle Validation]

end

subgraph Stage2["fa:fa-cogs STAGE 2: PROCESSING"]

direction LR

P1[fa:fa-cut Chunking] --> P2[fa:fa-video Encoding] --> P3[fa:fa-puzzle-piece Assembly]

end

subgraph Stage3["fa:fa-globe STAGE 3: DISTRIBUTION"]

direction LR

D1[fa:fa-database Storage] --> D2[fa:fa-server CDN Push] --> D3[fa:fa-tv Ready to Stream]

end

Stage1 --> Stage2

Stage2 --> Stage3

style Stage1 fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style Stage2 fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style Stage3 fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style I1 fill:#bbdefb,stroke:#1565c0

style I2 fill:#bbdefb,stroke:#1565c0

style I3 fill:#bbdefb,stroke:#1565c0

style P1 fill:#ffecb3,stroke:#f9a825

style P2 fill:#ffecb3,stroke:#f9a825

style P3 fill:#ffecb3,stroke:#f9a825

style D1 fill:#c8e6c9,stroke:#388e3c

style D2 fill:#c8e6c9,stroke:#388e3c

style D3 fill:#c8e6c9,stroke:#388e3c

Let me explain what happens at each stage.

Stage 1: Content Ingest and Inspection

How Content Arrives

Content comes from multiple sources. Netflix Originals come from production studios. Licensed content comes from distribution partners. Each source delivers files in different formats.

Netflix uses the Interoperable Master Format (IMF) for content delivery. IMF is an industry standard that separates video, audio, and metadata into distinct files. This makes it easier to handle multiple languages and versions.

flowchart TB

subgraph Sources["Content Sources"]

S1[fa:fa-film Production Studios]

S2[fa:fa-building Distribution Partners]

S3[fa:fa-globe Localization Teams]

end

subgraph IMF["IMF Package"]

direction LR

V[fa:fa-video Video Essence]

A[fa:fa-volume-up Audio Tracks]

T[fa:fa-closed-captioning Subtitles]

M[fa:fa-file-alt Metadata]

end

subgraph Ingest["Ingest System"]

I[fa:fa-download File Receiver]

end

S1 --> I

S2 --> I

S3 --> I

I --> V

I --> A

I --> T

I --> M

style Sources fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style IMF fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style Ingest fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

Automated Inspection

Before processing begins, every file goes through automated inspection. The system checks:

| Check | Purpose |

|---|---|

| Format validation | Ensure files meet technical specifications |

| Audio sync | Verify audio and video are aligned |

| Corruption detection | Find damaged frames or audio dropouts |

| Color space | Confirm correct HDR/SDR metadata |

| Frame rate | Validate consistent timing |

Problems caught here save hours of wasted encoding time. A corrupted source file would produce corrupted outputs at every quality level.

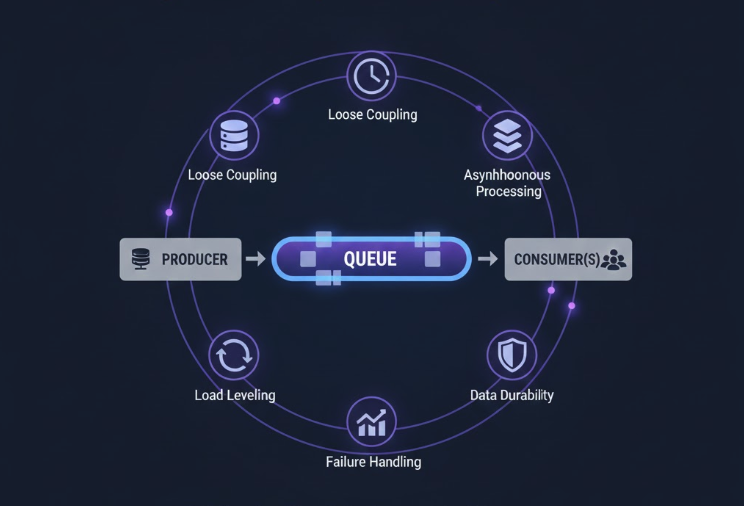

Developer lesson: Validate inputs before expensive processing. The earlier you catch problems, the cheaper they are to fix. This applies to any data pipeline, not just video. See Role of Queues in System Design for patterns on building robust processing pipelines.

Stage 2: Video Encoding

This is where the heavy lifting happens. Netflix needs to create multiple versions of each video optimized for different viewing conditions.

The Encoding Challenge

A viewer watching on a 4K TV with gigabit internet needs different video than someone watching on a phone over cellular data. Netflix creates an encoding ladder: a set of quality levels that cover all scenarios.

| Resolution | Typical Bitrate | Target Device |

|---|---|---|

| 4K HDR | 15+ Mbps | Smart TVs, high-end displays |

| 1080p | 5-8 Mbps | Laptops, tablets |

| 720p | 3-5 Mbps | Phones, slower connections |

| 480p | 1-2 Mbps | Very slow connections |

| 240p | 0.3-0.5 Mbps | Emergency fallback |

But here is the insight that changed everything: not all content needs the same bitrate to look good.

Per-Title Encoding

An animated movie like “Spider-Man: Into the Spider-Verse” has flat colors and sharp edges. A nature documentary like “Our Planet” has complex textures and constant motion. They have completely different encoding requirements.

Netflix pioneered per-title encoding. Instead of using a fixed encoding ladder for everything, they analyze each title and create a custom ladder.

flowchart TB

A1[fa:fa-film Source Video]

A2[fa:fa-chart-bar Complexity Scan]

A3[fa:fa-tachometer-alt Motion Detection]

Ladder{fa:fa-code-branch Encoding Decision}

L1[fa:fa-palette Animation]

L2[fa:fa-running Action Movie]

L3[fa:fa-tree Documentary]

R1[fa:fa-sliders-h Same Quality]

R2[fa:fa-database Less Storage]

R3[fa:fa-wifi Less Bandwidth]

A1 --> A2 --> A3 --> Ladder

Ladder --> L1

Ladder --> L2

Ladder --> L3

L1 --> R1

L2 --> R1

L3 --> R1

R1 --> R2 --> R3

style A1 fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style A2 fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style A3 fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style Ladder fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style L1 fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style L2 fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style L3 fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style R1 fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style R2 fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style R3 fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

The result: animated content might achieve excellent quality at 2 Mbps while an action movie needs 5 Mbps for the same perceived quality. Multiply this across thousands of titles and millions of viewers. The bandwidth savings are enormous.

Developer lesson: One-size-fits-all rarely works at scale. Analyze your workloads and optimize for their specific characteristics. This principle applies to database queries, API rate limits, and caching strategies too. See Caching Strategies Explained for examples.

The Cosmos Platform

Netflix rebuilt their video pipeline on a platform called Cosmos. It is their internal framework for building media processing microservices.

Why Microservices for Video?

The old monolithic system was becoming a bottleneck. Adding new codecs meant changing one massive codebase. Scaling up for a big release affected the entire system. And failures in one part could cascade everywhere.

Cosmos breaks video processing into independent services:

flowchart TB

QUEUE[fa:fa-stream Message Queue]

ORCH[fa:fa-sitemap Workflow Orchestrator]

VES[fa:fa-cogs Video Encoding]

AES[fa:fa-volume-up Audio Encoding]

TTS[fa:fa-closed-captioning Timed Text]

QAS[fa:fa-chart-line Quality Analysis]

STORE[fa:fa-database Object Storage]

QUEUE --> ORCH

ORCH --> VES

ORCH --> AES

ORCH --> TTS

VES --> QAS

AES --> QAS

TTS --> QAS

QAS --> STORE

style QUEUE fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style ORCH fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style VES fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style AES fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style TTS fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style QAS fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style STORE fill:#fff8e1,stroke:#f9a825,stroke-width:2px

Video Encoding Service (VES)

VES is the core encoding microservice. It receives chunks of video and encodes them according to specified parameters. Here is what makes it scalable:

| Feature | Benefit |

|---|---|

| Stateless processing | Any worker can handle any chunk |

| Containerized | Consistent environment across machines |

| Auto-scaling | Spin up more instances for big jobs |

| Failure isolation | One failed chunk does not kill the job |

The key insight is chunking. A 2-hour movie is not encoded as one giant job. It is split into small segments, maybe 10 seconds each. Each segment can be encoded independently on different machines.

sequenceDiagram

participant O as Orchestrator

participant Q as Queue

participant W1 as Worker 1

participant W2 as Worker 2

participant W3 as Worker 3

participant S as Storage

O->>Q: Split movie into 720 chunks

Q->>W1: Chunk 1-240

Q->>W2: Chunk 241-480

Q->>W3: Chunk 481-720

par Parallel Processing

W1->>S: Encoded chunks 1-240

W2->>S: Encoded chunks 241-480

W3->>S: Encoded chunks 481-720

end

S->>O: All chunks complete

O->>S: Assemble final video

A 2-hour movie with 10-second chunks means 720 encoding jobs. If each job takes 2 minutes on a single machine, sequential processing would take 24 hours. With 100 parallel workers, it takes about 15 minutes.

Developer lesson: Break big jobs into small, independent units. This pattern works for any batch processing: image resizing, data transformation, report generation. The key is making each unit stateless so any worker can process it. For a deeper look at distributed work patterns, see How Kafka Works.

VMAF: Measuring What Humans See

Traditional video quality metrics like PSNR (Peak Signal-to-Noise Ratio) measure mathematical differences between frames. But humans do not perceive quality mathematically.

Netflix developed VMAF (Video Multimethod Assessment Fusion) to predict how humans actually perceive video quality. They trained it on thousands of hours of subjective quality ratings from real viewers.

How VMAF Works

VMAF combines multiple quality indicators:

flowchart LR

subgraph Inputs["Quality Indicators"]

I1[fa:fa-eye Visual Difference]

I2[fa:fa-arrows-alt Motion Estimation]

I3[fa:fa-th Detail Loss]

end

subgraph Model["VMAF Model"]

ML[fa:fa-brain Machine Learning<br/>Fusion]

end

subgraph Output["Score"]

S[fa:fa-star 0-100 Scale]

end

I1 --> ML

I2 --> ML

I3 --> ML

ML --> S

style Inputs fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style Model fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style Output fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

A VMAF score of 93+ is considered excellent quality. Netflix uses VMAF at multiple points:

| Use Case | How VMAF Helps |

|---|---|

| Encoding decisions | Choose optimal bitrate for target quality |

| Codec comparison | Evaluate new codecs objectively |

| Quality monitoring | Detect problems in the pipeline |

| A/B testing | Measure impact of changes |

The model is open-source. Any developer can use VMAF to measure video quality in their own applications.

Developer lesson: Measure what actually matters to users, not just what is easy to measure. For APIs, that might be perceived latency instead of server response time. For search, that might be result relevance instead of query speed. See SLI, SLO, SLA Explained for how to define meaningful quality metrics.

Cloud Architecture on AWS

Netflix runs their video pipeline entirely on AWS. This gives them elasticity that would be impossible with fixed infrastructure.

Why Cloud for Video Processing

Video processing is bursty. When a new season drops, thousands of hours need encoding quickly. Between releases, demand drops significantly.

flowchart TB

subgraph Control["Control Plane"]

Lambda[fa:fa-bolt Lambda Triggers]

SQS[fa:fa-stream SQS Job Queue]

Lambda -->|1. Create jobs| SQS

end

subgraph Compute["Compute Layer"]

EC2[fa:fa-server EC2 Encoding Fleet]

Scale[fa:fa-arrows-alt-v Auto Scaling]

CW[fa:fa-chart-line CloudWatch Metrics]

Scale -.-> EC2

CW -.-> Scale

end

subgraph Storage["Storage Layer"]

S3in[fa:fa-cloud-upload-alt S3 Source Bucket]

S3out[fa:fa-cloud-download-alt S3 Output Bucket]

end

SQS -->|2. Pull jobs| EC2

S3in -->|3. Fetch source| EC2

EC2 -->|4. Store encoded| S3out

style Control fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

style Compute fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style Storage fill:#fff8e1,stroke:#f9a825,stroke-width:2px

How it works:

- New content triggers Lambda which creates encoding jobs

- Workers pull jobs from SQS queue

- Workers fetch source video from S3 input bucket

- Encoded chunks stored in S3 output bucket

- CloudWatch monitors queue depth and triggers Auto Scaling to add or remove workers

| AWS Service | Purpose |

|---|---|

| EC2 | Compute for encoding workers |

| S3 | Storage for source files and outputs |

| SQS | Job queues for work distribution |

| Auto Scaling | Adjust capacity based on queue depth |

Netflix does not need special hardware for most encoding. Standard EC2 instances handle the workload. They choose instance types based on the specific encoding needs: CPU-heavy for software encoding, GPU instances when hardware acceleration helps.

Developer lesson: Design for variable load from the start. If your workload is predictable, you can use reserved capacity. If it varies, embrace elasticity. See How Amazon S3 Works for how Netflix’s storage layer handles this scale.

Adaptive Bitrate Streaming

After encoding, Netflix needs to deliver video that adapts to changing network conditions. This is called adaptive bitrate (ABR) streaming.

How ABR Works

Instead of one video file, Netflix creates many versions at different quality levels. The player downloads small segments (typically 2-4 seconds) and can switch quality between segments based on network speed.

sequenceDiagram

participant P as Player

participant CDN as Netflix CDN

participant M as Manifest Server

P->>M: Request manifest

M->>P: Available quality levels

Note over P: Start with medium quality

P->>CDN: Segment 1 @ 1080p

CDN->>P: Video data

Note over P: Network slows down

P->>CDN: Segment 2 @ 720p

CDN->>P: Video data

Note over P: Network improves

P->>CDN: Segment 3 @ 1080p

CDN->>P: Video data

Note over P: Continue adapting...

Netflix supports both HLS (HTTP Live Streaming) and DASH (Dynamic Adaptive Streaming over HTTP) formats. The encoding pipeline produces segments in both formats to support all device types.

Open Connect: The Delivery Network

Encoding is only half the story. Getting video from Netflix’s servers to your screen efficiently requires their own CDN called Open Connect.

How Open Connect Works

Netflix places servers called Open Connect Appliances (OCAs) inside ISP networks around the world. Content is pre-positioned on these servers before you request it.

flowchart TB

subgraph Netflix["Netflix Cloud"]

PIPE[fa:fa-cogs Encoding Pipeline]

MASTER[fa:fa-database Master Storage]

end

subgraph OC["Open Connect CDN"]

OCA1[fa:fa-server OCA in ISP 1]

OCA2[fa:fa-server OCA in ISP 2]

OCA3[fa:fa-server OCA in ISP 3]

end

subgraph Users["Viewers"]

U1[fa:fa-tv User 1]

U2[fa:fa-mobile User 2]

U3[fa:fa-laptop User 3]

end

PIPE --> MASTER

MASTER -.->|Pre-position content| OCA1

MASTER -.->|Pre-position content| OCA2

MASTER -.->|Pre-position content| OCA3

OCA1 --> U1

OCA2 --> U2

OCA3 --> U3

style Netflix fill:#e3f2fd,stroke:#1565c0,stroke-width:2px

style OC fill:#fff8e1,stroke:#f9a825,stroke-width:2px

style Users fill:#e8f5e9,stroke:#388e3c,stroke-width:2px

When you hit play, Netflix’s control plane figures out which OCA has the content and is closest to you. The video comes from a server that might be in your ISP’s data center, not from a Netflix facility thousands of miles away.

| Benefit | Explanation |

|---|---|

| Lower latency | Server is physically closer |

| Better throughput | Less network congestion |

| Reduced costs | ISPs pay less for bandwidth |

| Higher reliability | Multiple OCAs provide redundancy |

Developer lesson: Move data closer to users. CDNs are not just for static files. Any data that is read more than written benefits from edge caching. See How Cloudflare Supports 55 Million Requests Per Second for another example of edge infrastructure.

Lessons for Software Developers

Netflix’s video pipeline offers patterns that apply far beyond video processing.

Lesson 1: Chunk Large Work

Do not process big jobs as monolithic tasks. Split them into independent chunks that can run in parallel.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

# Bad: Process entire video as one job

def encode_video(video_path):

# Takes 24 hours, can't parallelize

result = encode_full_video(video_path)

return result

# Good: Chunk and parallelize

def encode_video_parallel(video_path, num_workers=100):

chunks = split_into_segments(video_path, segment_duration=10)

# Each chunk processes independently

with ProcessPoolExecutor(max_workers=num_workers) as executor:

encoded_chunks = list(executor.map(encode_chunk, chunks))

return assemble_chunks(encoded_chunks)

This pattern works for any CPU-intensive batch job. Image processing, data transformation, report generation.

Lesson 2: Make Workers Stateless

Any worker should be able to handle any unit of work. Do not store state on the worker itself.

| Stateful (Bad) | Stateless (Good) |

|---|---|

| Worker keeps partial results in memory | All state in external storage |

| Failure loses progress | Failure just retries the chunk |

| Can not scale dynamically | Add or remove workers anytime |

| Specific worker for specific job | Any worker handles any job |

This is why Netflix can scale their encoding fleet up and down without coordination. Workers are interchangeable.

Lesson 3: Measure Quality Continuously

Do not just check quality at the end. Measure it at every step.

Netflix checks VMAF scores after each encoding stage. If quality degrades, they catch it immediately instead of discovering it after hours of downstream processing.

1

2

3

4

5

6

7

8

9

def encode_with_quality_gates(chunk, target_vmaf=93):

encoded = encode_chunk(chunk)

vmaf_score = measure_vmaf(chunk.original, encoded)

if vmaf_score < target_vmaf:

# Re-encode with higher bitrate

return encode_chunk(chunk, increase_bitrate=True)

return encoded

Apply this to your own systems. Validate data after each transformation. Check results match expectations before continuing. See How Stripe Prevents Double Payment for examples of quality gates in payment processing.

Lesson 4: Design for Variable Load

Build systems that handle both 10x traffic and 0.1x traffic gracefully.

Netflix’s pipeline sees massive spikes when new content arrives and quiet periods between releases. Their cloud architecture handles both by scaling compute resources up and down.

Key patterns:

- Use queues to absorb spikes

- Auto-scale based on queue depth

- Design for failure (some workers will crash)

- Make scaling fast (seconds, not minutes)

For queue patterns, see Role of Queues in System Design.

Lesson 5: Embrace Microservices for Complex Domains

Video processing has many distinct concerns: decoding, encoding, quality analysis, format conversion. Each is complex enough to be its own service.

Benefits Netflix sees from microservices:

| Benefit | How It Helps |

|---|---|

| Independent scaling | Scale encoding without scaling analysis |

| Isolated failures | Encoding crash does not kill ingestion |

| Easier updates | Deploy new codec without touching other services |

| Team autonomy | Different teams own different services |

But microservices add complexity. Do not adopt them just because Netflix does. Adopt them when your domain complexity justifies the operational overhead. See Modular Monolith Architecture for an alternative that works well for smaller teams.

Key Takeaways

-

Chunking enables parallelism. Netflix splits videos into segments so hundreds of machines can encode simultaneously. This reduces processing time from days to hours.

-

Per-content optimization beats one-size-fits-all. Each title gets a custom encoding ladder based on its complexity. Animated content uses less bandwidth than action movies at the same perceived quality.

-

VMAF measures what humans perceive. Netflix open-sourced their quality metric because traditional metrics did not predict user satisfaction. Measure what matters to users, not what is easy to measure.

-

Cloud enables elastic scaling. Fixed infrastructure cannot handle Netflix’s variable workload. Cloud lets them scale up for releases and scale down when idle.

-

Microservices isolate complexity. Video processing has many distinct concerns. Separating them into services makes each easier to develop, deploy, and scale.

-

Pre-positioning content reduces latency. Open Connect places videos in ISP networks before users request them. The best optimization is avoiding network round trips entirely.

-

Quality gates catch problems early. Checking quality after each step prevents wasted work. A corrupted source caught at ingestion saves hours of failed encoding.

Further Reading:

- Netflix Tech Blog: Video Processing Pipeline - Official deep dive from Netflix engineering

- How WhatsApp Scaled to Billions of Users - Another case study in building scalable systems

- Role of Queues in System Design - Patterns for handling variable load

- How Kafka Works - Distributed streaming platform used in media pipelines

- Caching Strategies Explained - Reduce processing by caching results

- System Design Cheat Sheet - Quick reference for scaling patterns

References: Netflix Tech Blog - Rebuilding Video Pipeline, Netflix Tech Blog - High Quality Encoding, Netflix Tech Blog - VES Microservice, Netflix Tech Blog - VMAF at Scale