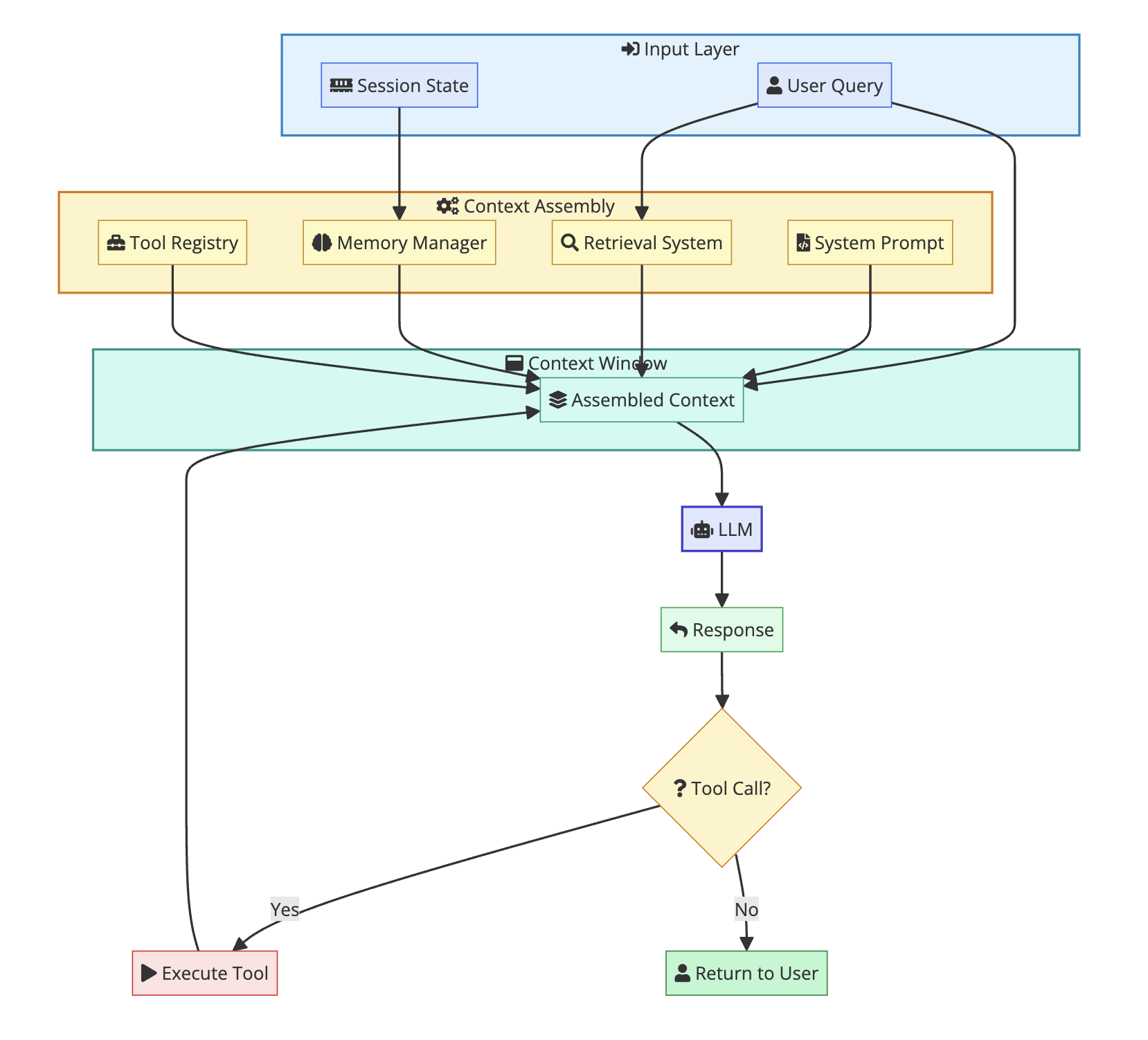

Context engineering = designing what information AI sees. Components: system instructions (behavior), RAG (retrieved docs), memory (conversation history), tools (capabilities), user query. Prompt engineering is a subset. The real skill is dynamically loading the right context within token limits.

You have probably heard of prompt engineering. Write better prompts, get better results. That advice worked when we were using ChatGPT for simple tasks. But if you are building real AI applications, prompt engineering is just the tip of the iceberg.

The real skill is context engineering.

Context engineering is the art of providing AI systems with the right information at the right time. It is not just about how you ask. It is about everything you give the AI to work with. The documents you retrieve. The conversation history you keep. The tools you make available. The structure of all that information.

This matters because AI models only know what you tell them. They do not have access to your codebase, your database, or your company wiki unless you give it to them. The difference between a mediocre AI application and a great one often comes down to how well you engineer that context.

TL;DR: Context engineering is the practice of providing AI systems with the right information at the right time. It includes system prompts, retrieved documents (RAG), conversation memory, tool definitions, and user queries. Unlike prompt engineering which focuses on how you ask, context engineering focuses on everything the AI sees. Master this skill to build AI applications that actually work.

Why Context Engineering Matters More Than Prompts

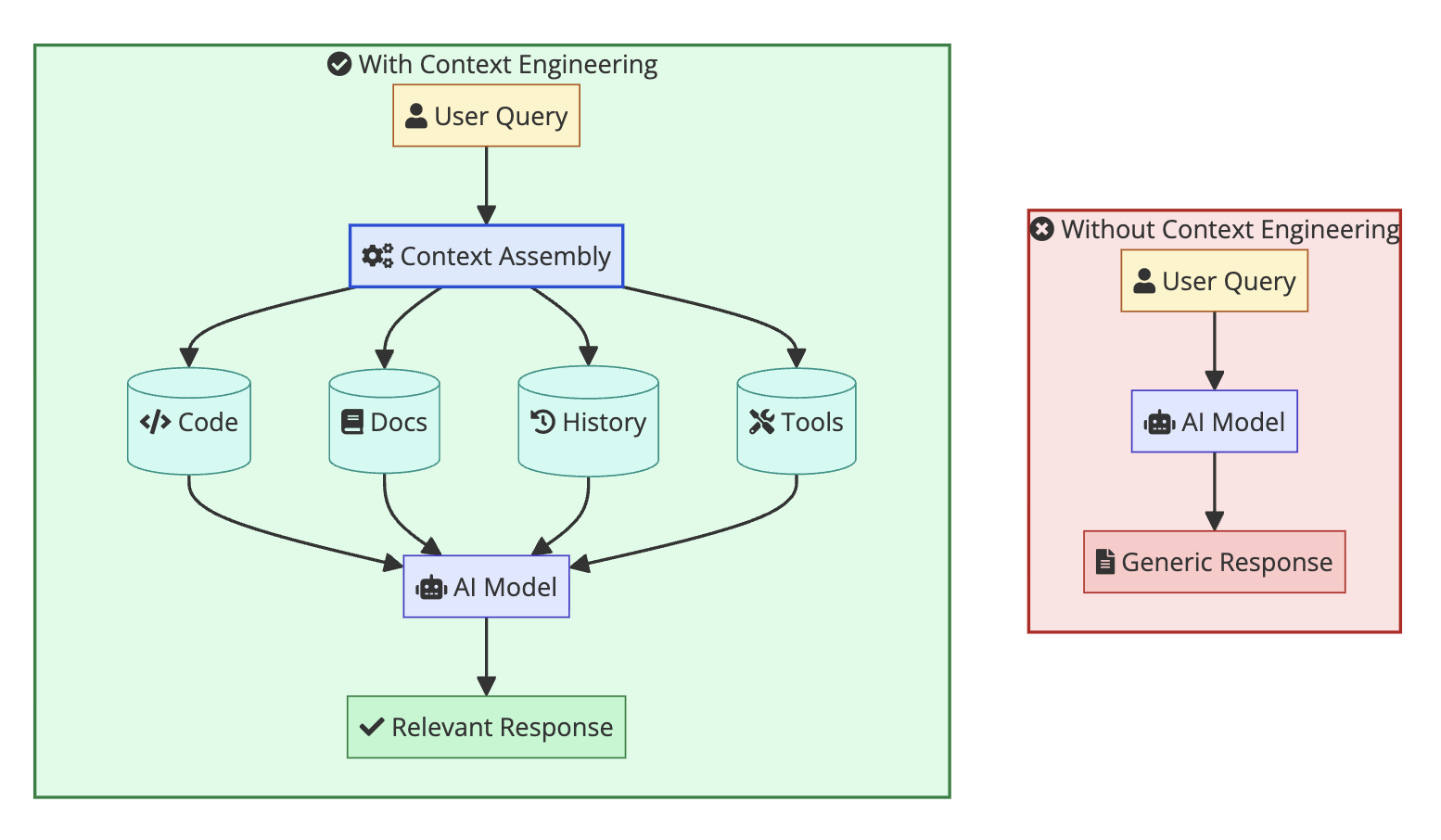

Here is a simple example. You ask an AI coding assistant to add a new feature to your project. Two scenarios:

Scenario A: You give it just a prompt describing the feature.

Scenario B: You give it the same prompt plus your project structure, existing code patterns, test conventions, and documentation about related features.

Same prompt. Completely different results. Scenario B will produce code that fits your project. Scenario A will produce generic code that you have to rewrite.

This is what context engineering solves. It is the system that gathers, filters, and structures information so the AI can actually help you.

The Building Blocks of Context

Every piece of information you send to an AI model is context. Let us break down the components.

System Instructions

These define how the AI should behave. Think of them as the rules of engagement. They include the persona, constraints, output format requirements, and any specific instructions for your use case.

For a code review assistant, your system instructions might specify that it should focus on security issues, follow your team’s style guide, and format responses in a specific way.

Retrieved Information

This is where RAG (Retrieval Augmented Generation) comes in. Instead of hoping the AI knows about your domain, you search for relevant information and include it in the context.

When a user asks about a specific API endpoint, you retrieve the documentation for that endpoint. When they ask about a bug, you retrieve the relevant code files and error logs. This retrieved information grounds the AI’s response in facts.

Conversation Memory

Past messages in the conversation provide continuity. But you cannot keep everything forever. Context windows have limits. You need strategies for what to keep, what to summarize, and what to drop.

Tool Definitions

Modern AI agents can call tools. Search databases. Execute code. Call APIs. Each tool needs a description so the AI knows when and how to use it. These definitions are part of your context.

User Query

Finally, the actual question or task from the user. This seems obvious, but how you structure and position the user query within the overall context affects how the AI processes it.

The Architecture of Context

Here is how these pieces fit together in a typical AI application:

The context assembly layer is where context engineering happens. It takes the user query, figures out what information is relevant, retrieves it from various sources, and packages it all into a format the AI can use.

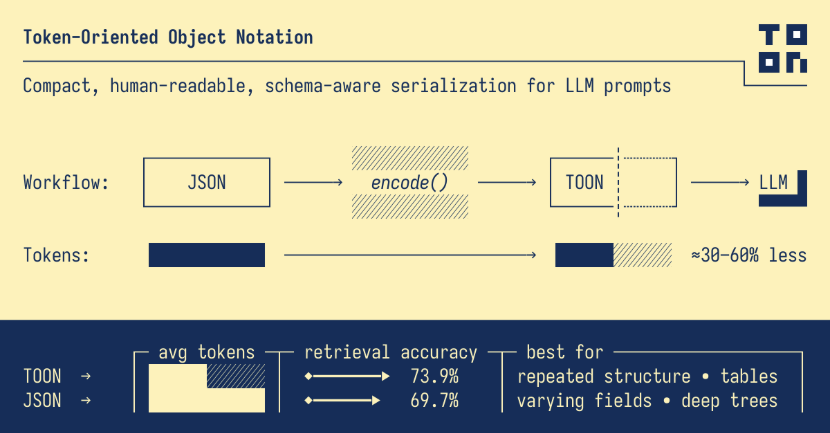

The Context Window Problem

Every AI model has a context window. This is the maximum amount of text it can process at once. Older models had 4K or 8K tokens. Newer ones go up to 128K or even 1M tokens.

But bigger is not always better.

| Window Size | Pros | Cons |

|---|---|---|

| Small (4K-8K) | Cheap, fast | Limited information |

| Medium (32K-128K) | Good balance | Moderate cost |

| Large (200K+) | Lots of context | Expensive, slower, may miss important details |

Research shows that putting everything in a huge context window can hurt performance. The AI may miss important details buried in the middle. It is called the “lost in the middle” problem. Models tend to pay more attention to the beginning and end of the context.

This is why context engineering is about quality over quantity. It is not about dumping everything in. It is about selecting and structuring the right information.

Practical Techniques

1. Semantic Chunking

Instead of splitting documents by fixed size, split them by meaning. Keep related information together. A function and its docstring should be in the same chunk. A section of documentation should stay intact.

This improves retrieval quality. When the user asks about a topic, you get complete, coherent pieces of information rather than fragments that start and end mid-sentence.

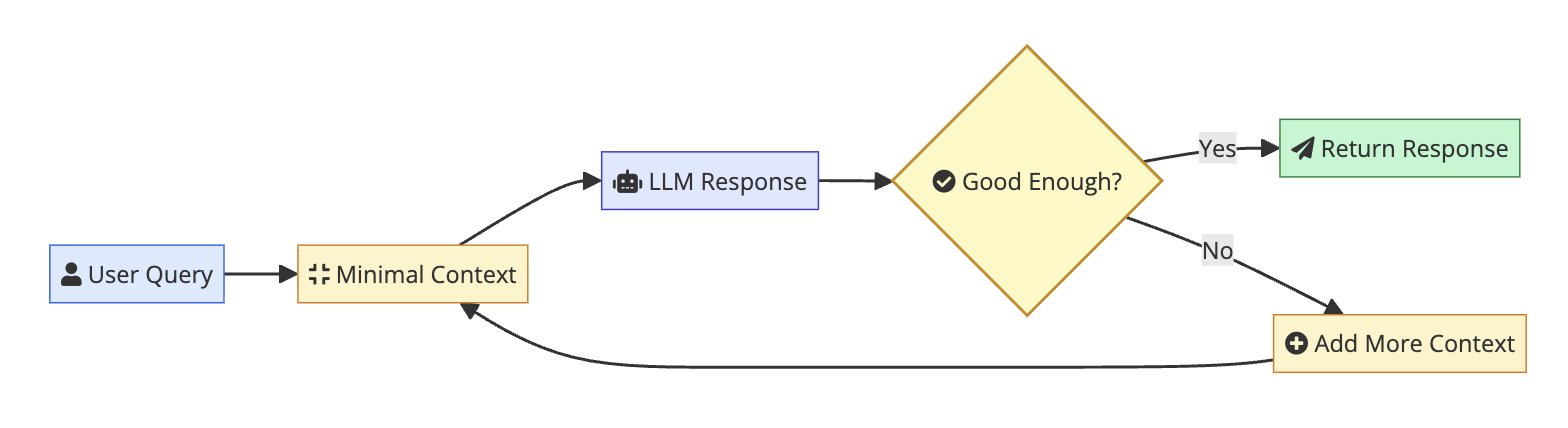

2. Progressive Context Loading

Start with minimal context. If the initial response is not good enough, add more context and try again. This saves tokens and cost for simple queries while still handling complex ones.

3. Context Compression

Summarize older conversation history instead of keeping every message. Extract key entities and facts from long documents. Remove boilerplate and keep the signal.

Some techniques:

| Technique | Use Case |

|---|---|

| Entity extraction | Pull out key names, dates, values |

| Summarization | Condense long documents or history |

| Deduplication | Remove repeated information |

| Schema enforcement | Keep only structured relevant fields |

4. Sliding Window Memory

For conversations, maintain different windows for different purposes:

- Immediate context: Last few turns, full detail

- Recent history: Summarized version of the last session

- Long term memory: Key facts stored in a database, retrieved when relevant

5. Smart Caching

If parts of your context are stable (system prompts, common documentation), cache them. Many APIs support prefix caching that reduces cost and latency for repeated context.

Context Engineering for Different Use Cases

AI Coding Assistants

Tools like Cursor, GitHub Copilot, and Windsurf are essentially context engineering systems. They figure out what code to include in context. The open file. Related files. The test file. The type definitions. Recent edits.

The quality of their suggestions depends heavily on how well they select and structure this context.

Customer Support Bots

Good support bots retrieve relevant knowledge base articles, past ticket history for the customer, and product documentation. Bad ones just have a generic prompt and hope for the best.

RAG Applications

The whole point of RAG is context engineering. You are retrieving relevant documents and putting them in context for the AI to use. The retrieval strategy, chunk size, number of chunks, and how you format them all matter.

AI Agents

Agents that can use tools need context about what tools are available and when to use them. As they work through multi-step tasks, they accumulate context from previous steps. Managing this growing context is critical.

Common Mistakes

Stuffing too much in: More context is not always better. Include what is relevant, leave out what is not. A focused context often outperforms a bloated one.

Ignoring structure: How you organize the context matters. Put the most important information where the model pays attention. Use clear separators and labels.

Static context: Real applications need dynamic context that changes based on the query. A single system prompt is rarely enough.

No feedback loop: You need to measure what works. Track which retrieved documents actually get used. Adjust your retrieval strategy based on results.

Forgetting security: Context can include sensitive data. Make sure you are not leaking private information to the model or in logs.

Security and Privacy

Context engineering introduces security considerations:

Data minimization: Only include data the AI needs. Do not dump entire databases into context just because you can.

Access controls: The AI should not see data the user is not authorized to access. Your retrieval system needs to respect permissions.

Prompt injection: Malicious content in your retrieved documents could try to override your system instructions. Sanitize and validate what goes into context.

Audit logging: Keep track of what context was used for each request. You may need this for compliance or debugging.

Measuring Success

How do you know if your context engineering is working? Track these metrics:

| Metric | What It Tells You |

|---|---|

| Context size per request | Are you efficient with tokens? |

| Retrieval relevance scores | Is your retrieval finding useful information? |

| Response accuracy | Is the AI giving correct answers? |

| Cache hit rates | Are you saving costs with caching? |

| User satisfaction | Are users actually helped? |

A/B test different context strategies. Try different numbers of retrieved documents. Experiment with context ordering. The right approach depends on your specific use case.

The Future

Context engineering is becoming more important as AI applications get more complex. Simple chat is giving way to agents that work on multi-step tasks, use tools, and operate autonomously for longer periods.

These systems need sophisticated context management. The context grows over time. Relevance changes as the task progresses. Memory needs to persist across sessions.

We are also seeing context windows get bigger, but that does not solve everything. It shifts the problem from “how do I fit this in” to “how do I organize this so the AI can use it effectively.”

The developers who master context engineering will build AI applications that actually work. The ones who focus only on prompts will keep wondering why their results are inconsistent.

Getting Started

If you are building AI applications, start thinking about context as a first class concern:

-

Map your information sources: What data does the AI need to do its job? Codebase, documentation, user history, external APIs?

-

Design your retrieval strategy: How will you find relevant information for each query? Semantic search, keyword matching, rules based?

-

Plan your context structure: How will you organize system instructions, retrieved data, history, and tools? What goes where?

-

Set your limits: How much context can you afford (tokens cost money)? How will you prioritize when space is limited?

-

Build feedback loops: How will you know what is working? What will you measure and iterate on?

Context engineering is not a one time task. It is an ongoing practice of refining how your AI systems get the information they need.

Further Reading:

- Understanding Context Engineering by Architecture and Governance

- Four Important Lessons About Context Engineering by InfoWorld

- Context Engineering in Practice by Inngest

- What is Context Engineering? by Cognizant

- Context Engineering Blog by ContextEngineering.ai