Git Config Guide: Every Setting You Need to Know

Configure Git the right way and never think about it again

The practical guide to Git commands you will actually use at work

A practical guide to agent architecture, tool calling, and the patterns that matter

What every developer needs to know about feeding AI the right information

23 battle-tested solutions that every developer should know

The voting pattern that keeps your distributed data consistent and your servers from fighting

Master the command line with these practical Linux commands every developer should know

Stop guessing at regular expressions and start writing them with confidence

The complete guide to cache patterns that every backend developer should know

Separate your reads from writes and stop fighting with your database

The library that brings HTML back to life and makes you question everything you thought you knew about frontend development

Inside Google Spanner, the database that broke the rules of distributed systems

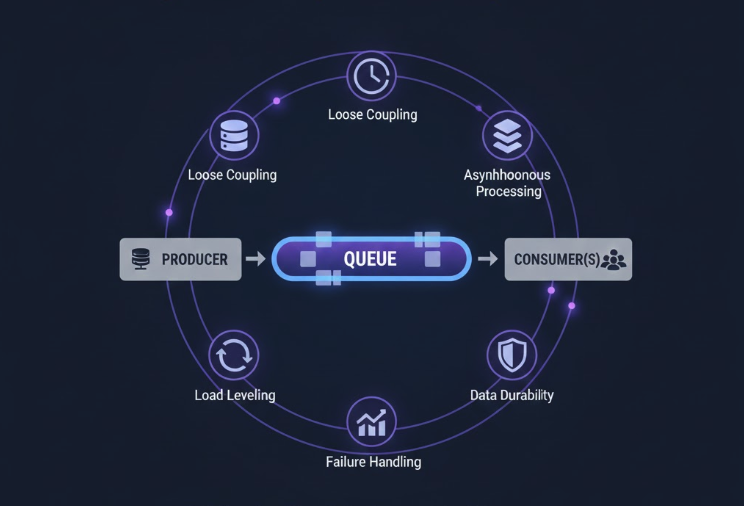

How message queues help build scalable, resilient systems that don't fall apart under pressure

Inside the distributed storage system that powers Netflix, Airbnb, and most of the internet